Abstract

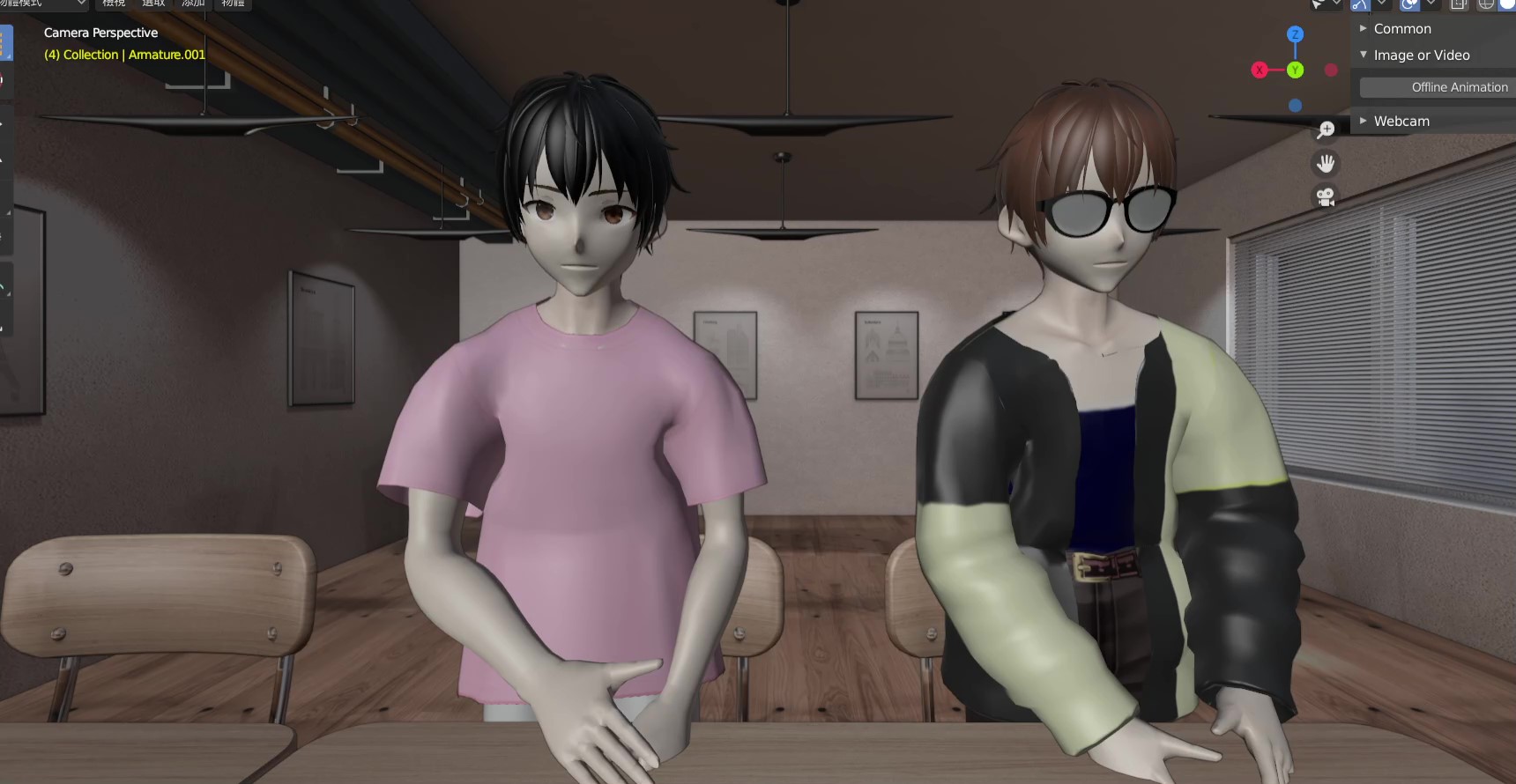

This study developed a real-time, one-stage pose reconstruction system that takes a single Logitech webcam or a video as input and drives the motion of virtual characters in Blender. The system is based on ROMP, a one-stage, multi-branch regression network for pose reconstruction. It extends the single-person ROMP Blender virtual character control system so that it can control two to three virtual characters simultaneously. Research on 3D human pose and shape estimation has focused on improving network architectures and datasets, but practical applications in virtual live streaming remain lacking. Moreover, optical marker-based motion capture systems are expensive and take 10 to 15 minutes to set up. This study therefore developed a deep learning-based solution, which will be applied to interview-style programs. The system runs at 14 to 20 frames per second with webcam input and at 12 to 17 frames per second with video input. It reduces the cost of a motion capture system from 5 million to 1,000 New Taiwan Dollars and shortens the setup time from 10 to 15 minutes to 3 minutes.